Abstract

We introduce OceanSplat, a novel 3D Gaussian Splatting-based approach for high-fidelity underwater scene reconstruction. To overcome multi-view inconsistencies caused by scattering media, we design a trinocular setup for each camera pose by rendering from horizontally and vertically translated virtual viewpoints, enforcing view consistency to facilitate spatial optimization of 3D Gaussians. Furthermore, we derive synthetic epipolar depth priors from the virtual viewpoints, which serve as self-supervised depth regularizers to compensate for the limited geometric cues in degraded underwater scenes. We also propose a depth-aware alpha adjustment that modulates the opacity of 3D Gaussians during early training based on their depth along the viewing direction, deterring the formation of medium-induced primitives. Our approach promotes the disentanglement of 3D Gaussians from the scattering medium through effective geometric constraints, enabling accurate representation of scene structure and significantly reducing floating artifacts. Experiments on real-world underwater and simulated scenes demonstrate that OceanSplat substantially outperforms existing methods for both scene reconstruction and restoration in scattering media.
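The trinocular setup can be pictured as one real camera plus two virtual cameras that share its orientation and are shifted along its own horizontal and vertical axes. The following is a minimal sketch of that idea only, not the authors' implementation. It assumes a 4x4 camera-to-world pose whose first two rotation columns are the camera's x and y axes; the function name `translated_viewpoints` and the `baseline` value are hypothetical, since the abstract does not state either.

```python
import numpy as np

def translated_viewpoints(c2w: np.ndarray, baseline: float):
    """Return two virtual camera-to-world poses for one real pose.

    Assumption: c2w is a 4x4 camera-to-world matrix whose columns 0 and 1
    hold the camera's x (horizontal) and y (vertical) axes in world space.
    """
    x_axis = c2w[:3, 0]  # camera horizontal axis, world coordinates
    y_axis = c2w[:3, 1]  # camera vertical axis, world coordinates

    horizontal = c2w.copy()
    horizontal[:3, 3] = c2w[:3, 3] + baseline * x_axis  # shift the camera center sideways

    vertical = c2w.copy()
    vertical[:3, 3] = c2w[:3, 3] + baseline * y_axis  # shift the camera center along y

    return horizontal, vertical

# Usage with an identity pose and a hypothetical 0.1-unit baseline.
pose = np.eye(4)
pose_h, pose_v = translated_viewpoints(pose, baseline=0.1)
```

Both virtual poses keep the rotation of the real camera, so the three views differ only by a pure translation, which is what makes the epipolar geometry between them simple.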

Video Presentation

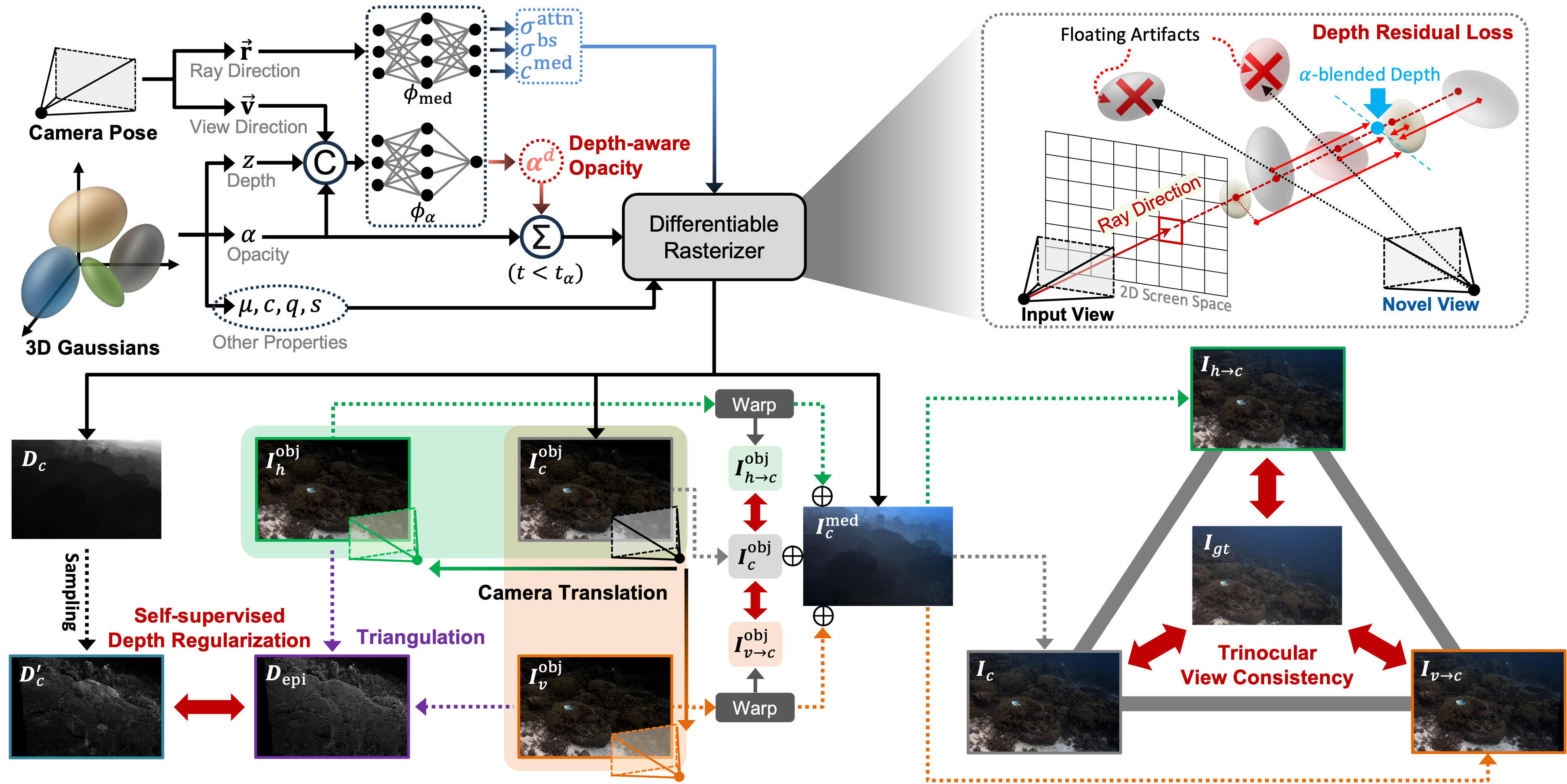

Methodology

Overview of OceanSplat. We enforce trinocular consistency by inverse warping rasterized outputs from two orthogonal views to guide 3D Gaussian placement. From these views, we derive a synthetic epipolar depth prior via triangulation to provide self-supervised geometric constraints. Additionally, depth-aware alpha adjustment suppresses erroneous 3D Gaussians early in training and aligns rendered depth with the Gaussian z-component to prevent floaters in novel views.

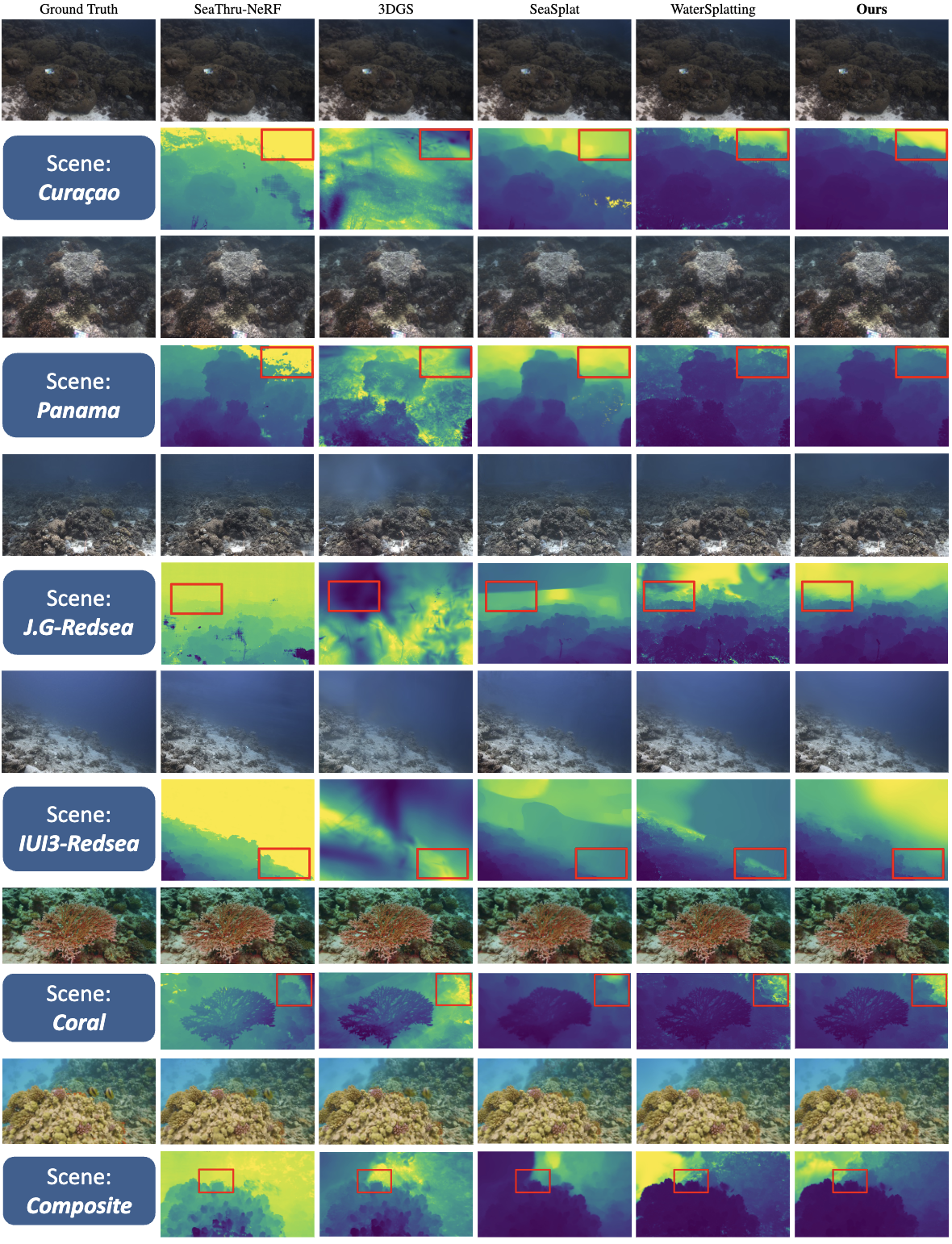
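For two views that differ by a pure translation, triangulation reduces to the familiar stereo relation: depth equals focal length times baseline divided by disparity. The sketch below illustrates that relation with a pinhole camera and a horizontally translated virtual view. It is an assumption-laden example, not the method's actual depth prior: the helper names `depth_from_disparity` and `project_u`, the intrinsics, and the baseline are all hypothetical.

```python
import numpy as np

def depth_from_disparity(disparity, focal, baseline, eps=1e-6):
    """Depth along the optical axis from the pixel disparity between two translated views."""
    disparity = np.asarray(disparity, dtype=np.float64)
    safe = np.maximum(np.abs(disparity), eps)  # guard against division by zero
    return focal * baseline / safe

def project_u(point, focal, cx, cam_x=0.0):
    """Horizontal pixel coordinate of a 3D point for a pinhole camera centered at (cam_x, 0, 0)."""
    x, _, z = point
    return focal * (x - cam_x) / z + cx

# Hypothetical intrinsics and baseline, chosen only for illustration.
focal, cx, baseline = 500.0, 320.0, 0.1
point = np.array([0.4, -0.2, 2.5])  # point in the reference camera frame

u_ref = project_u(point, focal, cx)                   # reference view
u_virt = project_u(point, focal, cx, cam_x=baseline)  # horizontally translated view
depth = depth_from_disparity(u_ref - u_virt, focal, baseline)
```

In this example the recovered `depth` matches the z-coordinate of `point`. The same relation holds for the vertically translated view with the vertical pixel coordinate, so two orthogonal baselines give two independent depth estimates per pixel.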

Real-World Underwater Scenes

Qualitative results of novel view synthesis on diverse real-world underwater scenes.

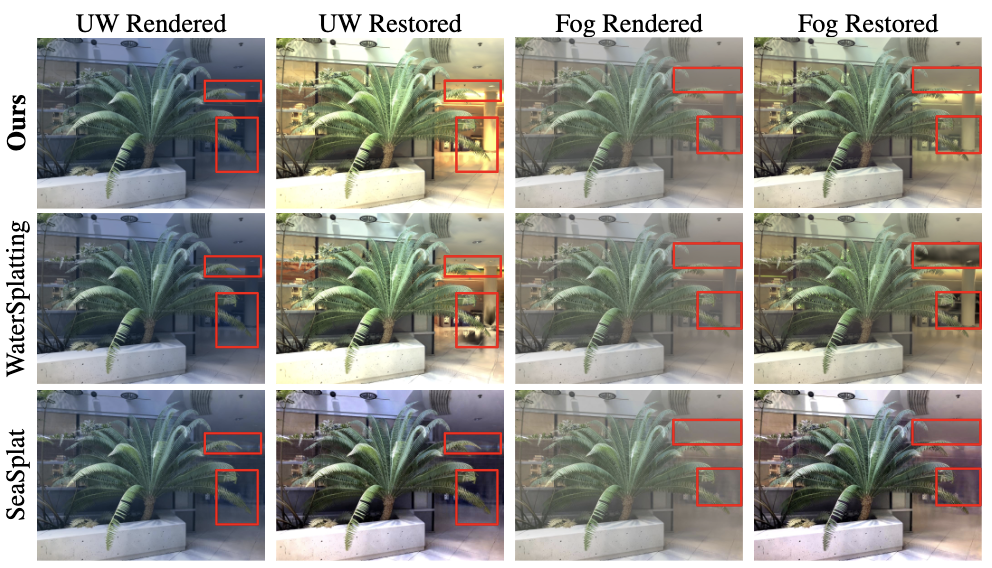

Simulated Underwater and Foggy Scenes

Qualitative results of novel view synthesis and scene restoration on simulated underwater/foggy scenes.

BibTeX

@article{kweon2026oceansplat,
  title={OceanSplat: Object-aware Gaussian Splatting with Trinocular View Consistency for Underwater Scene Reconstruction},
  author={Kweon, Minseong and Park, Jinsun},
  journal={arXiv preprint arXiv:2601.04984},
  year={2026}
}